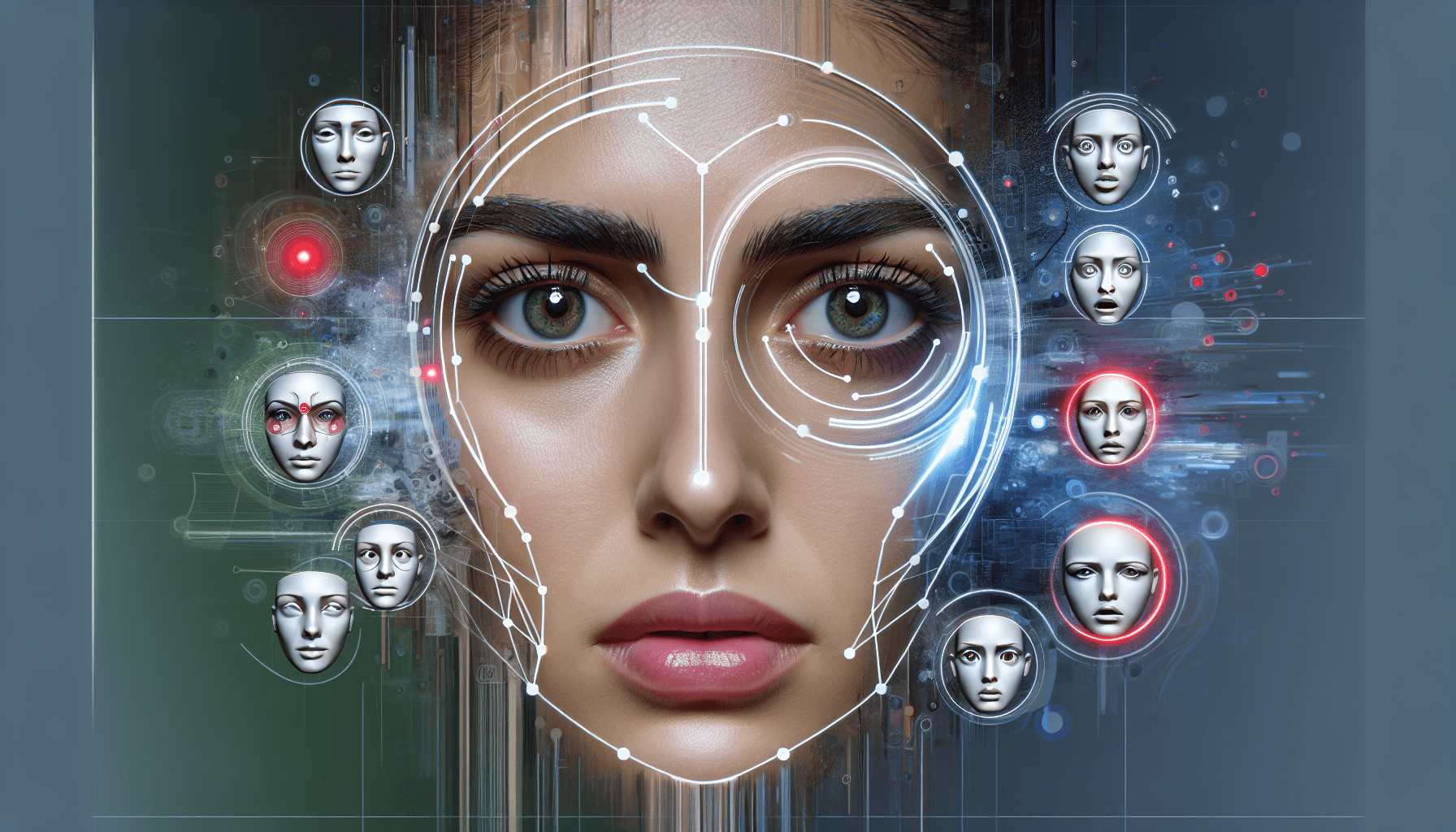

Imagine having a virtual assistant that can understand not just your words, but also your emotions. Facial expression analysis aims to make this possible: it allows a virtual assistant to analyze your facial expressions and infer the emotions behind them. This article dives into the world of emotionally intelligent virtual assistants, covering everything from the science behind facial expression analysis to its practical applications in various industries. By the end, you'll see why the future of virtual assistants is not just about efficiency, but also about empathy.

Overview of Facial Expression Analysis

Definition of facial expression analysis

Facial expression analysis is the process of detecting and interpreting facial expressions to infer an individual's emotional state. It uses computer vision and machine learning techniques to analyze subtle movements and changes in the face, such as raised eyebrows, smiles, or frowns, that can signal how a person is feeling.

Importance of facial expression analysis in virtual assistants

Facial expression analysis plays a crucial role in developing emotionally intelligent virtual assistants. By accurately recognizing and understanding facial expressions, virtual assistants can gain insights into users’ emotions, allowing them to respond appropriately and provide more personalized interactions. This technology enhances the user experience, making virtual assistants more engaging, empathetic, and effective in their communication.

How facial expression analysis works

Facial expression analysis involves several steps. First, the system captures an image or video of the user's face using a camera or webcam. Next, computer vision algorithms detect the face and locate facial landmarks, such as the positions of the eyes, eyebrows, nose, and mouth. The system then measures how these landmarks move and how the shape of the face changes, since these changes are indicative of different emotions. Finally, machine learning or deep learning models classify the detected expressions into specific emotions, such as happiness, sadness, or anger. When this pipeline runs on live video with low latency, the virtual assistant can respond to the user's emotional cues as they happen.
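The pipeline above can be sketched in Python. This is a toy illustration under stated assumptions, not a production method. It assumes the capture and landmark-detection steps have already produced a few named (x, y) landmark coordinates. The landmark names, the two geometric features, and the thresholds are all hypothetical. A simple rule-based function stands in for the trained classifier of the final step.

```python
from dataclasses import dataclass
from math import dist

# Hypothetical landmark format: name -> (x, y) in pixels, with y growing downward.
Landmarks = dict[str, tuple[float, float]]

@dataclass
class Features:
    mouth_curve: float  # > 0 when the mouth corners sit higher than the mouth center
    brow_raise: float   # eye-to-brow distance relative to face width

def extract_features(lm: Landmarks) -> Features:
    """Turn raw landmark positions into features that do not depend on face size."""
    face_width = dist(lm["left_face_edge"], lm["right_face_edge"])
    corner_y = (lm["mouth_left"][1] + lm["mouth_right"][1]) / 2
    mouth_curve = (lm["mouth_center"][1] - corner_y) / face_width
    brow_raise = (lm["left_eye"][1] - lm["left_brow"][1]) / face_width
    return Features(mouth_curve, brow_raise)

def classify(f: Features) -> str:
    """Rule-based stand-in for a trained classifier; thresholds are illustrative only."""
    if f.mouth_curve > 0.03:
        return "happiness"
    if f.brow_raise > 0.12:
        return "surprise"
    if f.mouth_curve < -0.03:
        return "sadness"
    return "neutral"

smiling_face = {
    "left_face_edge": (0, 100), "right_face_edge": (200, 100),
    "mouth_left": (70, 150), "mouth_right": (130, 150), "mouth_center": (100, 160),
    "left_eye": (70, 80), "left_brow": (70, 65),
}
print(classify(extract_features(smiling_face)))  # happiness
```

Dividing by face width keeps the features comparable whether the user sits close to the camera or far from it. In a real system, a model trained on labeled examples would replace the hand-written rules.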

Emotional Intelligence in Virtual Assistants

Explanation of emotional intelligence

Emotional intelligence refers to the ability to recognize, understand, and manage emotions, both in oneself and in others. It involves being aware of one’s own emotions, empathizing with others, and effectively regulating emotions to guide thinking and behavior. Emotional intelligence plays a crucial role in human-human interactions, and its integration into virtual assistants aims to enhance their ability to understand and respond to human emotions.

Integration of emotional intelligence in virtual assistants

By incorporating emotional intelligence into virtual assistants, these AI-powered systems can better perceive, understand, and respond to the emotional states of their users. This involves not only recognizing facial expressions but also considering vocal tone, body language, and context to form a holistic understanding of emotions. Virtual assistants can then adapt their responses and behavior accordingly, offering empathy, support, and personalized assistance based on the user’s emotions.

Benefits of emotionally intelligent virtual assistants

Emotionally intelligent virtual assistants offer numerous benefits. They can provide more relevant and empathetic responses, creating a more comfortable and engaging user experience. These assistants can also assist with emotional well-being, providing support and guidance in times of stress or sadness. Additionally, emotionally intelligent virtual assistants can be valuable in healthcare, therapy, and education, where personalized emotional support can be crucial for individuals’ well-being and learning outcomes.

The Role of Facial Expressions in Emotional Intelligence

Understanding the connection between facial expressions and emotions

Facial expressions are a major form of non-verbal communication and play a crucial role in conveying emotions. The face contains many muscles that contract and relax to produce different expressions. Certain expressions are commonly associated with specific emotions, such as a smile with happiness or a frown with sadness. The link is not one-to-one, however: people also smile out of politeness or nervousness. Analyzing facial expressions together with context allows virtual assistants to gain valuable insights into the user's emotional state, enabling them to respond appropriately.

Why facial expressions are important for emotional intelligence

Facial expressions provide valuable cues about a person's emotional state, sometimes even when their words do not. Integrating facial expression analysis into virtual assistants gives them a more complete picture of the user's emotions, supporting a deeper level of empathy and connection. By recognizing facial expressions, virtual assistants can tailor their responses and offer appropriate support.

Research on facial expressions and emotional intelligence

Extensive research has been conducted on the link between facial expressions and emotional intelligence. Studies have shown that individuals with higher emotional intelligence tend to be more accurate in recognizing and interpreting facial expressions. Research on how people perceive and produce facial expressions has also informed the design of algorithms and models that analyze and classify them. This gives virtual assistants a basis for understanding and responding to users' emotions.

Techniques for Facial Expression Analysis

Traditional computer vision techniques for facial expression analysis

Traditional computer vision techniques for facial expression analysis involve the extraction of facial features, such as eye and mouth regions, using various image processing algorithms. These features are then fed into machine learning models that classify the expressions into different emotion categories. While these techniques have been effective in certain settings, they often rely on handcrafted features and may struggle to capture the complexities and subtle nuances of facial expressions.
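The sketch below makes the idea of a handcrafted feature concrete with local binary patterns (LBP), a texture descriptor widely used in traditional facial expression pipelines. The text above does not name a specific descriptor, so choosing LBP here is an assumption.

Each interior pixel is encoded as an 8-bit number recording which of its neighbors are at least as bright as it. A histogram of these codes is then computed for each face region, such as eye and mouth patches whose coordinates would come from a landmark detector. The concatenated histograms form the feature vector passed to a classifier.

```python
# A grayscale image as rows of pixel intensities (0-255).
Image = list[list[int]]

# The 8 neighbors of a pixel, clockwise from the top-left; neighbor i sets bit i.
NEIGHBORS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_code(img: Image, r: int, c: int) -> int:
    """8-bit LBP code: bit i is 1 when neighbor i is at least as bright as the center."""
    center = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(NEIGHBORS):
        if img[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code

def lbp_histogram(img: Image) -> list[float]:
    """Normalized 256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            hist[lbp_code(img, r, c)] += 1
    total = sum(hist)
    if total == 0:  # patch too small to have interior pixels
        return [0.0] * 256
    return [h / total for h in hist]

def region_features(img: Image, regions: list[tuple[int, int, int, int]]) -> list[float]:
    """Concatenate LBP histograms of face regions given as (top, left, height, width)."""
    features: list[float] = []
    for top, left, h, w in regions:
        patch = [row[left:left + w] for row in img[top:top + h]]
        features.extend(lbp_histogram(patch))
    return features
```

Because the descriptor is fixed by hand rather than learned from data, it can miss subtle cues. This is the limitation of handcrafted features noted above.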

Machine learning approaches for facial expression analysis

Machine learning approaches for facial expression analysis involve training models on large datasets of labeled facial expressions. These models learn to recognize patterns and correlations between facial features and emotions. Machine learning algorithms, such as Support Vector Machines (SVM) or Decision Trees, are commonly used for classification tasks. These approaches have shown promising results, but they still require careful feature engineering and may struggle with variations in lighting conditions or head poses.
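Here is a minimal sketch of the SVM option, assuming a linear kernel and binary labels. It trains with Pegasos, a stochastic sub-gradient method for the SVM objective. Pegasos is chosen here for brevity; libraries such as scikit-learn use other solvers.

The two-dimensional training points are hypothetical stand-ins for engineered features such as mouth curvature and eyebrow raise. Multi-class emotion recognition would typically combine several binary classifiers of this kind, for example one-vs-rest.

```python
import random

Vector = list[float]

def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))

def train_linear_svm(X: list[Vector], y: list[int], lam: float = 0.01,
                     iterations: int = 2000, seed: int = 0) -> Vector:
    """Linear SVM trained with Pegasos (stochastic sub-gradient descent on hinge loss).

    Labels must be +1 or -1. A constant 1.0 is appended to every sample so the
    last weight acts as a bias (here it is regularized like the other weights).
    """
    rng = random.Random(seed)
    data = [list(x) + [1.0] for x in X]
    w = [0.0] * len(data[0])
    for t in range(1, iterations + 1):
        i = rng.randrange(len(data))
        eta = 1.0 / (lam * t)  # Pegasos step size
        margin = y[i] * dot(w, data[i])
        w = [(1 - eta * lam) * wj for wj in w]  # regularization shrinks w
        if margin < 1:  # inside the margin or misclassified: hinge loss is active
            w = [wj + eta * y[i] * xj for wj, xj in zip(w, data[i])]
    return w

def predict(w: Vector, x: Vector) -> int:
    return 1 if dot(w, list(x) + [1.0]) >= 0 else -1

# Toy 2-D feature vectors: +1 = "happiness", -1 = "not happiness".
X_train = [[2, 1], [3, 2], [2.5, 1.5], [3, 1], [-2, -1], [-3, -2], [-1.5, -1], [-2, -2]]
y_train = [1, 1, 1, 1, -1, -1, -1, -1]
weights = train_linear_svm(X_train, y_train)
print([predict(weights, x) for x in X_train])
```

The quality of the result depends entirely on the input features. If lighting or head pose distorts them, the classifier inherits those errors.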

Deep learning methods for facial expression analysis

Deep learning methods, particularly convolutional neural networks (CNNs), have transformed facial expression analysis. These models learn directly from raw image data, automatically extracting a hierarchy of features, from edges and textures in early layers to larger facial patterns in deeper ones. Trained on large labeled datasets, deep learning models generally outperform handcrafted-feature approaches and are more robust to changes in lighting and head pose. Their accuracy still drops, however, on images unlike those seen in training. They capture complex patterns and subtle variations in facial expressions, and with efficient architectures or hardware acceleration they can run in real time. This makes them well suited to emotionally intelligent virtual assistants.
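The core operations of a CNN layer can be illustrated in plain Python: a 2D convolution, a ReLU nonlinearity, and max pooling. In a trained network the kernel weights are learned from data. Here a vertical-edge kernel is hand-picked purely for illustration, and the small image is a toy example. As in most deep learning libraries, the "convolution" is computed as cross-correlation, meaning the kernel is not flipped.

```python
Matrix = list[list[float]]

def conv2d(img: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D convolution, stride 1, computed as cross-correlation like most DL libraries."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(img) - kh + 1, len(img[0]) - kw + 1
    return [
        [sum(img[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
         for c in range(out_w)]
        for r in range(out_h)
    ]

def relu(m: Matrix) -> Matrix:
    """Keep positive responses, zero out negative ones."""
    return [[max(0.0, v) for v in row] for row in m]

def max_pool2x2(m: Matrix) -> Matrix:
    """Non-overlapping 2x2 max pooling; an odd trailing row or column is dropped."""
    return [
        [max(m[r][c], m[r][c + 1], m[r + 1][c], m[r + 1][c + 1])
         for c in range(0, len(m[0]) - 1, 2)]
        for r in range(0, len(m) - 1, 2)
    ]

# Toy 6x6 grayscale patch: dark on the left, bright on the right.
image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(6)]
vertical_edge = [[-1.0, 0.0, 1.0] for _ in range(3)]  # hand-picked here, normally learned

feature_map = max_pool2x2(relu(conv2d(image, vertical_edge)))
print(feature_map)  # [[3.0, 3.0], [3.0, 3.0]]
```

A real model stacks many such layers, each with many learned kernels, and ends in a classifier over emotion categories. Frameworks such as PyTorch or TensorFlow provide optimized versions of these operations.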

Challenges and Limitations of Facial Expression Analysis

Accuracy and reliability of facial expression analysis

One of the key challenges in facial expression analysis is achieving high accuracy and reliability. Analyzing facial expressions is inherently complex, because the way emotions are expressed differs significantly between individuals. It is also influenced by internal and external factors, from mood and fatigue to lighting and camera angle. Ensuring accuracy requires robust training datasets, careful validation, and continuous improvement of the models to account for individual differences and context-dependent expressions.
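Careful validation means looking beyond a single accuracy number. The sketch below uses hypothetical validation labels to compute overall accuracy, a confusion matrix, and per-class recall. In this example the overall accuracy looks acceptable, yet anger is never recognized. Only the per-class view reveals that weakness.

```python
from collections import Counter

def confusion_matrix(y_true: list[str], y_pred: list[str]) -> Counter:
    """Counts of (true label, predicted label) pairs; off-diagonal pairs are errors."""
    return Counter(zip(y_true, y_pred))

def accuracy(y_true: list[str], y_pred: list[str]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def per_class_recall(y_true: list[str], y_pred: list[str]) -> dict[str, float]:
    """Fraction of each true emotion that the model labeled correctly."""
    totals = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: correct[label] / n for label, n in totals.items()}

# Hypothetical validation results.
y_true = ["happiness", "happiness", "happiness", "sadness", "sadness", "anger"]
y_pred = ["happiness", "happiness", "happiness", "neutral", "sadness", "happiness"]
print(accuracy(y_true, y_pred))          # about 0.67
print(per_class_recall(y_true, y_pred))  # {'happiness': 1.0, 'sadness': 0.5, 'anger': 0.0}
print(confusion_matrix(y_true, y_pred)[("anger", "happiness")])  # 1
```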

Variability in facial expressions across different individuals and cultures

Facial expressions can vary widely across individuals and cultures due to factors such as personal traits, upbringing, and cultural norms. This variability poses a challenge for facial expression analysis algorithms, as they need to account for these individual and cultural differences to accurately interpret emotions. Ongoing research aims to develop more culturally inclusive models that can adapt to individual nuances, ensuring that virtual assistants can effectively analyze and respond to facial expressions from diverse users.
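One way to account for individual differences is per-user calibration. This is offered here as an illustrative assumption rather than a method the article prescribes. The assistant records a few frames of the user's neutral face and then expresses later features as deviations from that baseline. The feature values below are hypothetical.

```python
from statistics import mean, pstdev

class UserBaseline:
    """Per-user calibration: express features relative to this user's neutral face."""

    def __init__(self, neutral_samples: list[list[float]]):
        columns = list(zip(*neutral_samples))  # one column per feature
        self.means = [mean(col) for col in columns]
        # A feature that never varied gets spread 1.0 to avoid dividing by zero.
        self.stds = [pstdev(col) or 1.0 for col in columns]

    def normalize(self, features: list[float]) -> list[float]:
        """Return z-scores: how far each feature is from this user's neutral value."""
        return [(f - m) / s for f, m, s in zip(features, self.means, self.stds)]

# Two hypothetical users with different resting mouth shapes (feature: mouth_curve).
user_a = UserBaseline([[-0.01], [0.01]])  # resting mouth roughly flat
user_b = UserBaseline([[0.03], [0.05]])   # resting mouth naturally upturned
print(user_a.normalize([0.04]))  # about [4.0]: a clear departure from neutral
print(user_b.normalize([0.04]))  # about [0.0]: this user's normal resting face
```

The same raw value is a strong signal for one user and no signal at all for the other. This is the kind of individual variability described above.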

Ethical considerations in facial expression analysis

Facial expression analysis raises important ethical considerations related to privacy, consent, and potential misuse of facial expression data. Proper measures must be in place to ensure that user privacy is respected, and explicit consent is obtained for collecting and analyzing facial expression data. Transparent and responsible use of facial expression analysis technology is necessary to prevent potential biases and protect user rights. Ongoing dialogue and regulation are essential to address these ethical concerns and ensure the responsible development and deployment of facial expression analysis algorithms.

Applications of Facial Expression Analysis in Virtual Assistants

Enhancing user experience through emotion recognition

Facial expression analysis enables virtual assistants to enhance the user experience by recognizing and responding to emotions. By adapting their responses and behavior to match the user’s emotions, virtual assistants can create a more engaging and personalized interaction. For example, if a user expresses frustration, the virtual assistant can offer assistance or provide calming suggestions. Emotion recognition through facial expression analysis opens up a wide range of possibilities for creating more emotionally engaging virtual assistants.
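Per-frame emotion predictions are noisy, so an assistant that reacted to every frame would feel erratic. The minimal sketch below assumes the classifier outputs a probability for each emotion on every video frame. An exponential moving average smooths those probabilities, and the assistant changes its response only when one emotion clearly dominates. The emotion labels, smoothing factor, threshold, and response names are all hypothetical.

```python
EMOTIONS = ("neutral", "happiness", "frustration", "sadness")

# Hypothetical mapping from the dominant emotion to a response strategy.
RESPONSES = {
    "neutral": "continue",
    "happiness": "continue",
    "frustration": "offer_help",
    "sadness": "offer_comfort",
}

class EmotionSmoother:
    """Exponential moving average over per-frame emotion probabilities."""

    def __init__(self, alpha: float = 0.3, threshold: float = 0.6):
        self.alpha = alpha          # weight given to the newest frame
        self.threshold = threshold  # minimum smoothed probability before acting
        self.state = {e: 1.0 / len(EMOTIONS) for e in EMOTIONS}  # start undecided

    def update(self, frame_probs: dict[str, float]) -> str:
        """Blend in one frame's probabilities and return the response to use now."""
        for e in EMOTIONS:
            new = frame_probs.get(e, 0.0)
            self.state[e] = (1 - self.alpha) * self.state[e] + self.alpha * new
        top = max(self.state, key=self.state.get)
        # Fall back to neutral behavior unless one emotion clearly dominates.
        if self.state[top] < self.threshold:
            return RESPONSES["neutral"]
        return RESPONSES[top]

smoother = EmotionSmoother()
for frame in [{"frustration": 1.0}, {"frustration": 1.0}]:
    print(smoother.update(frame))  # "continue", then "offer_help"
```

With these settings, a single frustrated frame is ignored, while two consecutive ones trigger an offer of help. Tuning `alpha` and `threshold` trades responsiveness against stability.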

Improving personalized responses based on emotional cues

By analyzing facial expressions, virtual assistants can gain insights into the user’s emotional state and preferences. This enables them to tailor their responses and recommendations to match the user’s needs and interests. For example, if a user appears sad, the virtual assistant can offer comforting words or suggest activities that may uplift their mood. Facial expression analysis enhances the personalization and customization capabilities of virtual assistants, making them more effective in meeting individual needs.

Adapting virtual assistant behavior to match user emotions

Facial expression analysis allows virtual assistants to adapt their behavior and communication style based on the user’s emotions. Virtual assistants can modulate their vocal tone, pace, and choice of words to better connect with the user and provide the desired emotional support. For instance, if a user appears stressed, the virtual assistant can adopt a more calming voice and offer mindfulness exercises. By mirroring and responding to the user’s emotions, virtual assistants can establish a more empathetic and supportive connection.
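One concrete way to adjust vocal tone and pace is to generate Speech Synthesis Markup Language (SSML), a W3C standard that many text-to-speech engines accept. The `<prosody>` element and its `rate`, `pitch`, and `volume` keywords come from SSML 1.1. The mapping from emotion to speaking style is a hypothetical example, and engines differ in which SSML features they support.

```python
from xml.sax.saxutils import escape

# Hypothetical style table; the values are standard SSML 1.1 <prosody> keywords.
STYLE = {
    "stress":    {"rate": "slow",   "pitch": "low",    "volume": "soft"},
    "sadness":   {"rate": "slow",   "pitch": "medium", "volume": "medium"},
    "happiness": {"rate": "medium", "pitch": "high",   "volume": "medium"},
}
DEFAULT_STYLE = {"rate": "medium", "pitch": "medium", "volume": "medium"}

def to_ssml(reply: str, emotion: str) -> str:
    """Wrap the reply in SSML whose prosody is chosen from the user's detected emotion."""
    style = STYLE.get(emotion, DEFAULT_STYLE)
    attrs = " ".join(f'{name}="{value}"' for name, value in style.items())
    return (
        '<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="en-US">'
        f"<prosody {attrs}>{escape(reply)}</prosody></speak>"
    )

print(to_ssml("Let's pause & take a slow breath.", "stress"))
```

Escaping the reply text keeps characters such as `&` from breaking the markup.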

Real-World Examples of Facial Expression Analysis in Virtual Assistants

Case study: Emotionally intelligent virtual assistant in customer service

In customer service, facial expression analysis can be used to enhance the interactions between virtual assistants and customers. For example, a virtual assistant handling a video support session can analyze the customer's facial expressions to gauge their satisfaction level. If it detects sustained signs of frustration or dissatisfaction, it can promptly offer assistance or escalate the issue to a human agent. This real-time analysis enables virtual assistants to provide more personalized and empathetic support, which can improve customer satisfaction.
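The sketch below shows one way to implement the escalation rule. It assumes the assistant receives a frustration probability for each analyzed frame. Rather than escalating on a single frame, it hands off to a human agent only when frustration appears in most of the recent frames. The window size, hit count, and confidence threshold are hypothetical values that would need tuning.

```python
from collections import deque

class EscalationMonitor:
    """Suggests a human hand-off when frustration persists across recent frames."""

    def __init__(self, window: int = 10, min_hits: int = 7, min_confidence: float = 0.5):
        self.recent = deque(maxlen=window)  # only the last `window` frames are kept
        self.min_hits = min_hits
        self.min_confidence = min_confidence

    def observe(self, frustration_prob: float) -> bool:
        """Record one frame; return True when the session should be escalated."""
        self.recent.append(frustration_prob >= self.min_confidence)
        return sum(self.recent) >= self.min_hits

monitor = EscalationMonitor()
for prob in [0.2, 0.8, 0.9, 0.7, 0.95, 0.85, 0.9, 0.88]:
    if monitor.observe(prob):
        print("Escalating to a human agent")
        break
```

Requiring several hits within a window keeps a brief grimace from triggering a hand-off, while sustained frustration still escalates quickly.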

Case study: Virtual therapist utilizing facial expression analysis

Facial expression analysis can be instrumental in developing virtual therapists that provide emotional support and therapy. By analyzing the patient's facial expressions during therapy sessions, the virtual therapist can assess their emotional state and adapt the therapy accordingly. If the virtual therapist detects signs of distress or sadness, it can modify the therapy techniques or provide additional support resources. Facial expression analysis allows virtual therapists to offer personalized and targeted interventions, which may make therapy more effective and accessible.

Case study: Emotional companion virtual assistant for individuals with special needs

Facial expression analysis can be applied in the development of emotional companion virtual assistants for individuals with special needs. For example, an emotional companion virtual assistant could support individuals with autism by analyzing their facial expressions and offering appropriate interventions. If the virtual assistant detects signs of anxiety or sensory overload, it can guide the user through relaxation techniques or suggest sensory-friendly activities. Because a user's expressions may differ from those in typical training data, such a system would need to be calibrated to the individual. Facial expression analysis enables virtual assistants to provide tailored emotional support, which may improve the well-being and daily functioning of individuals with special needs.

Future Developments in Facial Expression Analysis

Advancements in facial recognition technology

Facial expression analysis is poised for significant advances as the underlying face-analysis technology improves. Better high-resolution cameras, more advanced algorithms, and hardware acceleration are making real-time analysis more accurate and practical. Depth-sensing technologies, such as 3D cameras or LiDAR, can provide more precise facial geometry, helping virtual assistants capture subtle facial movements and micro-expressions. These advancements should further improve the performance and capabilities of facial expression analysis algorithms.

Integration of facial expression analysis with other forms of emotional analysis

To create more comprehensive emotionally intelligent virtual assistants, facial expression analysis can be integrated with other forms of emotional analysis. Examples include analysis of vocal tone (speech emotion recognition) and sentiment analysis of the user's words. By combining multiple modalities, virtual assistants can gain a deeper understanding of the user's emotional state and intentions. One modality can also compensate when another is ambiguous or unavailable, for example when the camera is switched off. This integration can enable more nuanced and context-aware responses, enhancing the virtual assistant's ability to provide empathetic and personalized support.
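One simple way to combine modalities is weighted late fusion. Each modality produces its own probability distribution over emotions, and the distributions are averaged with weights reflecting how much each source is trusted. The weights and example scores below are hypothetical, and learned fusion models are a common alternative. Missing modalities are skipped and the remaining weights are renormalized.

```python
# Hypothetical trust weights per modality; in practice these would be tuned or learned.
WEIGHTS = {"face": 0.5, "voice": 0.3, "text": 0.2}

def fuse(predictions: dict[str, dict[str, float]]) -> dict[str, float]:
    """Weighted late fusion of per-modality emotion probability distributions.

    Unknown or missing modalities (e.g. camera switched off) are skipped, and the
    remaining weights are renormalized, so if each input sums to 1 the output does too.
    """
    available = {m: p for m, p in predictions.items() if m in WEIGHTS}
    total_weight = sum(WEIGHTS[m] for m in available)
    if total_weight == 0:
        raise ValueError("no known modality provided")
    labels = {label for p in available.values() for label in p}
    return {
        label: sum(WEIGHTS[m] * p.get(label, 0.0) for m, p in available.items()) / total_weight
        for label in labels
    }

fused = fuse({
    "face": {"happiness": 0.6, "neutral": 0.4},     # polite smile
    "voice": {"frustration": 0.7, "neutral": 0.3},  # tense tone
    "text": {"frustration": 0.9, "neutral": 0.1},   # "this still doesn't work"
})
print(max(fused, key=fused.get))  # frustration
```

Here the fused result favors frustration even though the face alone suggests happiness. A polite smile can be outweighed by vocal and verbal evidence.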

Potential applications in healthcare, education, and entertainment

Facial expression analysis also holds considerable potential in domains beyond virtual assistants. In healthcare, it is being explored for early detection of mental health conditions, pain assessment, and monitoring patient well-being. In education, facial expression analysis can support personalized learning, adaptive tutoring, and student engagement assessment. In entertainment, it can enhance gaming experiences, enabling virtual characters to respond and interact based on the user's emotions. Continued advances in these areas could reshape how technology interacts with and understands human emotions.

Ethical Considerations in Facial Expression Analysis

Privacy concerns related to facial expression analysis

Facial expression analysis raises concerns regarding privacy and the collection of sensitive data. As facial expression analysis relies on capturing and analyzing images or videos of individuals’ faces, proper measures should be in place to ensure the protection of user privacy. It is crucial to obtain explicit consent and provide transparent information about how facial expression data will be used, stored, and shared. Privacy safeguards, such as data anonymization and secure storage, must be implemented to protect user confidentiality and prevent unauthorized access.

Fairness and bias in facial expression analysis algorithms

Facial expression analysis algorithms need to be fair and unbiased to ensure equitable treatment across individuals and diverse populations. Biases can arise from imbalanced training data in which some demographic groups are underrepresented. It is essential to address these biases during algorithm development by using diverse and inclusive training datasets. Regular auditing and testing, such as comparing accuracy across demographic groups, can help identify and correct any biases that arise. Responsible development and continuous improvement of these algorithms promote fairness and help prevent discrimination.
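Here is a minimal sketch of such an audit. It assumes each validation example is tagged with a demographic group. The code computes accuracy per group and flags the model when the gap between the best- and worst-served groups exceeds a tolerance. The group names, records, and 5-percentage-point tolerance are hypothetical. Real audits typically also compare per-class metrics and error types across groups.

```python
from collections import defaultdict

def accuracy_by_group(records: list[tuple[str, str, str]]) -> dict[str, float]:
    """records: (group, true_label, predicted_label) tuples."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, truth, pred in records:
        totals[group] += 1
        hits[group] += truth == pred
    return {g: hits[g] / totals[g] for g in totals}

def audit(records: list[tuple[str, str, str]],
          max_gap: float = 0.05) -> tuple[dict[str, float], float, bool]:
    """Per-group accuracy, the largest gap between groups, and whether it is within tolerance."""
    acc = accuracy_by_group(records)
    gap = max(acc.values()) - min(acc.values())
    return acc, gap, gap <= max_gap

# Hypothetical validation records for two groups.
records = (
    [("group_a", "happiness", "happiness")] * 9 + [("group_a", "sadness", "neutral")]
    + [("group_b", "happiness", "happiness")] * 7 + [("group_b", "sadness", "neutral")] * 3
)
acc, gap, passed = audit(records)
print(acc, round(gap, 2), passed)
```

In this example the model is noticeably less accurate for one group, so the audit fails. That would prompt collecting more representative data or retraining before deployment.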

Responsible and transparent use of facial expression data

Responsible use of facial expression data involves employing appropriate security measures, adhering to privacy regulations, and ensuring transparency in data handling practices. Users should have control and autonomy over their facial expression data, with the option to opt-in or opt-out of data collection and analysis. Virtual assistant providers need to communicate clearly about the purpose and use of facial expression data, fostering trust and confidence among users. Responsible and transparent use of facial expression data is vital for maintaining user trust and ensuring the ethical use of this technology.
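The principles above can be expressed directly in code. This is a design sketch, not a compliance recipe: the class and field names are hypothetical, and `classify` stands for whatever expression model is in use. The sketch follows four rules:

- Analysis is opt-in.
- Storing results requires a separate permission.
- Only derived labels are kept, never raw frames.
- Opting out erases the stored labels.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Optional

@dataclass
class ConsentRecord:
    opted_in: bool = False      # opt-in: analysis stays off until the user enables it
    store_labels: bool = False  # separate permission to keep derived emotion labels

@dataclass
class EmotionService:
    consent: dict[str, ConsentRecord] = field(default_factory=dict)
    log: list[tuple[str, str, datetime]] = field(default_factory=list)

    def process(self, user_id: str, frame: bytes,
                classify: Callable[[bytes], str]) -> Optional[str]:
        record = self.consent.get(user_id, ConsentRecord())
        if not record.opted_in:
            return None  # no consent: the frame is never analyzed
        label = classify(frame)
        if record.store_labels:
            # Keep only the derived label and a timestamp, never the raw frame.
            self.log.append((user_id, label, datetime.now(timezone.utc)))
        return label

    def opt_out(self, user_id: str) -> None:
        """Withdraw consent and erase everything stored for this user."""
        self.consent[user_id] = ConsentRecord()
        self.log = [entry for entry in self.log if entry[0] != user_id]
```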

Conclusion

Facial expression analysis plays a central role in the development of emotionally intelligent virtual assistants. By recognizing and interpreting facial expressions, virtual assistants can better understand and respond to users’ emotions, creating a more engaging and personalized experience. The integration of emotional intelligence enables virtual assistants to adapt their responses, offer emotional support, and enhance user satisfaction. Despite challenges and ethical considerations, advancements in facial expression analysis technology continue to drive its application in various domains, from customer service to healthcare and entertainment. The future holds immense potential for leveraging facial expression analysis to empower virtual assistants with a deeper understanding of human emotions, facilitating more empathetic and effective interactions.